
BigQuery Pricing Mastery: How to Save Up To 70% of Costs

by Crystalloids Team on Apr 15, 2024 2:01:13 PM

BigQuery, Google's powerful data warehouse, empowers businesses to unlock valuable insights from massive datasets. But with great power comes, well, cost considerations. Understanding BigQuery pricing and implementing innovative cost-saving strategies ensures you maximize your data analysis investment.

Understanding Google BigQuery Pricing Model

Within the Google Cloud Platform (GCP), BigQuery charges for two main things: storing your data (storage) and analyzing it with queries (query processing, also called compute).

BigQuery Storage Pricing:

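This is what you pay to keep data in BigQuery tables. Two rates apply automatically, depending on when a table or partition was last modified (the figures below are US multi-region list prices for logical storage):

- Active Storage: Any table or partition modified in the last 90 days. You pay $0.02 per GB per month.

- Long-term Storage: A table or partition that has not been modified for 90 consecutive days is billed at $0.01 per GB per month. BigQuery applies this rate automatically, so there is nothing to move, and querying the data does not reset the 90-day clock.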
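To make the two storage rates concrete, here is a minimal Python sketch that estimates one month of storage cost. It assumes the per-GB rates quoted above and ignores the monthly free allowance and regional price differences; the function name is hypothetical and not part of any Google API.

```python
# Rates from the storage list above, in USD per GB per month (assumed US list prices).
ACTIVE_RATE_PER_GB = 0.02
LONG_TERM_RATE_PER_GB = 0.01


def monthly_storage_cost(active_gb: float, long_term_gb: float) -> float:
    """Estimate one month of BigQuery storage cost in USD.

    Hypothetical helper: ignores the free tier and regional pricing.
    """
    if active_gb < 0 or long_term_gb < 0:
        raise ValueError("storage sizes must be non-negative")
    return active_gb * ACTIVE_RATE_PER_GB + long_term_gb * LONG_TERM_RATE_PER_GB


if __name__ == "__main__":
    # 500 GB modified recently plus 2,000 GB of untouched history.
    print(f"${monthly_storage_cost(500, 2000):.2f}")  # prints $30.00
    # The same 2,500 GB billed entirely as active storage.
    print(f"${monthly_storage_cost(2500, 0):.2f}")    # prints $50.00
```

The gap between the two results is the saving you keep simply by not rewriting historical tables.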

Query Costs (Query Data Processing):

This refers to the cost of running analyses on your data stored in BigQuery. BigQuery offers two pricing models for processing your query data:

- On-demand pricing: A pay-as-you-go model based on how much data your queries process (bytes scanned). The US multi-region list price is $6.25 per TiB (raised from the long-standing $5 per TB in July 2023), and the first 1 TiB processed each month is free. This suits occasional queries or workloads with unpredictable usage.

- Capacity-based pricing (BigQuery Editions): Suited to predictable workloads with frequent queries. Instead of paying per byte scanned, you pay for compute capacity measured in BigQuery slots, either pay-as-you-go per slot-hour or at a discount through one- or three-year commitments. Because you pay for slot time rather than for the data your queries read, this can be significantly more cost-effective than on-demand pricing when your workloads are consistent.
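A rough way to compare the two models is to price the same month both ways. The sketch below assumes $6.25 per TiB for on-demand queries and a hypothetical $0.04 per slot-hour, with a fixed number of slots running all month (about 730 hours); real Editions billing with autoscaling charges for the slot-hours actually used, so treat this as a back-of-the-envelope check rather than a quote.

```python
# Assumed list prices; check the current BigQuery pricing page for your region and edition.
ON_DEMAND_USD_PER_TIB = 6.25
SLOT_HOUR_USD = 0.04  # hypothetical pay-as-you-go slot-hour price

BYTES_PER_TIB = 1024 ** 4


def on_demand_cost(bytes_scanned: int, usd_per_tib: float = ON_DEMAND_USD_PER_TIB) -> float:
    """On-demand: billed on bytes processed (free tier ignored)."""
    return bytes_scanned / BYTES_PER_TIB * usd_per_tib


def capacity_cost(slots: int, hours: float, usd_per_slot_hour: float = SLOT_HOUR_USD) -> float:
    """Capacity: billed on slot-hours, regardless of bytes scanned."""
    return slots * hours * usd_per_slot_hour


def cheaper_model(bytes_scanned: int, slots: int, hours: float) -> str:
    """Return which model costs less for the given monthly usage."""
    if on_demand_cost(bytes_scanned) <= capacity_cost(slots, hours):
        return "on-demand"
    return "capacity"


if __name__ == "__main__":
    monthly_hours = 730  # roughly one month of continuous capacity
    for tib in (200, 1000):
        scanned = tib * BYTES_PER_TIB
        print(tib, "TiB:",
              f"on-demand ${on_demand_cost(scanned):,.2f},",
              f"100 slots ${capacity_cost(100, monthly_hours):,.2f}",
              "->", cheaper_model(scanned, 100, monthly_hours))
```

With these assumed prices, 200 TiB a month is cheaper on demand, while 1,000 TiB a month tips the balance toward capacity.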

Clusters are not directly related to BigQuery pricing, but the concept is worth understanding. A cluster is a group of machines that work together to process queries. BigQuery is serverless and manages this compute behind the scenes, so you never provision or pay for machines directly. Query complexity still matters, though: a complex query consumes more resources, which shows up as more slot time under capacity-based pricing, while on-demand billing depends only on the bytes processed.

Use the Google Cloud Pricing Calculator to estimate your costs and share the estimate with your team.

Optimizing Your BigQuery Costs

- Monitor costs closely: Use Cloud Billing reports and budget alerts to track spending, and query the INFORMATION_SCHEMA.JOBS views to find the jobs and users that process the most bytes.

- Optimize queries: Review your query structure to reduce the amount of data processed. Select only the columns you need, use partitioning and materialized views to avoid rescanning data, and check the bytes estimate from a dry run before executing a large query.

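- Use cost-effective storage options: Data left unmodified for 90 days is billed at the long-term rate automatically, so avoid rewriting historical tables and partitions unnecessarily. You can also compare logical and physical (compressed) storage billing at the dataset level and choose the model that costs less for your data.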

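- Schedule queries: BigQuery has no off-peak discounts, so running a query at night does not lower its price. With capacity-based pricing, however, scheduling batch jobs outside busy hours can make better use of the slots you already pay for.

- Enable data deletion: Regularly delete unnecessary data, for example by setting table or partition expiration, to minimize storage costs and keep your datasets lean.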

- Employ a BigQuery cost audit service: Consider using professional services (such as Crystalloids) to analyse your usage, identify hidden cost-saving opportunities, and reduce the number of bytes your queries process.
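As a sketch of the monitoring step: the INFORMATION_SCHEMA.JOBS views expose per-job columns such as `user_email` and `total_bytes_billed`. Assuming you have already fetched those rows (fetching is not shown), a few lines of Python can rank who drives on-demand spend. The $6.25 per TiB rate is an assumption, and a missing `total_bytes_billed` value is treated as zero.

```python
from collections import defaultdict
from typing import Any, Dict, Iterable, List, Mapping, Tuple

BYTES_PER_TIB = 1024 ** 4
USD_PER_TIB = 6.25  # assumed on-demand list price; adjust for your region


def cost_by_user(jobs: Iterable[Mapping[str, Any]]) -> List[Tuple[str, float]]:
    """Estimate on-demand spend per user, most expensive first.

    Each job mirrors two INFORMATION_SCHEMA.JOBS columns:
    'user_email' and 'total_bytes_billed' (None is counted as 0).
    """
    totals: Dict[str, int] = defaultdict(int)
    for job in jobs:
        totals[job["user_email"]] += job.get("total_bytes_billed") or 0
    costs = [(user, billed / BYTES_PER_TIB * USD_PER_TIB) for user, billed in totals.items()]
    return sorted(costs, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    jobs = [
        {"user_email": "etl@example.com", "total_bytes_billed": 4 * BYTES_PER_TIB},
        {"user_email": "analyst@example.com", "total_bytes_billed": BYTES_PER_TIB},
        {"user_email": "etl@example.com", "total_bytes_billed": None},
    ]
    for user, usd in cost_by_user(jobs):
        print(f"{user}: ${usd:.2f}")  # etl@example.com: $25.00, then analyst@example.com: $6.25
```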

Case Study: BigQuery Pricing Strategies for 77% Cost Reduction

Our e-commerce client faced unexpected cost surges caused by inefficient BigQuery usage. A series of processes implemented by another company updated and enriched data in BigQuery every hour, which drove costs up significantly. This approach led to:

- Frequent Updates: Individual row updates were driving up costs. BigQuery excels at large-scale analysis, not frequent updates.

- Excessive Data Scanning: Queries retrieving all fields from tables resulted in unnecessary data scanning.

- Unpartitioned Tables: Scanning all data in large tables incurred high costs.
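To see why the last two patterns are expensive, recall that on-demand queries are billed for the columns they reference across the partitions they read. The sketch below is a simplified model under stated assumptions: the table, its column sizes and the even spread of data over 30 daily partitions are hypothetical, and real billing also applies a 10 MB minimum per table referenced.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

GIB = 1024 ** 3


@dataclass
class Table:
    """Hypothetical table model: stored bytes per column, spread evenly over partitions."""
    column_bytes: Dict[str, int]
    partitions: int = 1


def bytes_scanned(table: Table, columns: Optional[List[str]] = None,
                  partitions_read: Optional[int] = None) -> int:
    """Approximate bytes an on-demand query is billed for.

    columns=None models SELECT *; partitions_read=None models a query with
    no filter on the partitioning column, so every partition is read.
    """
    cols = list(table.column_bytes) if columns is None else columns
    total = sum(table.column_bytes[c] for c in cols)
    if partitions_read is None:
        return total
    # Even spread assumed: reading k of n partitions reads k/n of the bytes.
    return total * partitions_read // table.partitions


orders = Table(
    column_bytes={"order_id": 50 * GIB, "customer_id": 50 * GIB,
                  "created_at": 100 * GIB, "payload": 800 * GIB},
    partitions=30,  # e.g. 30 daily partitions
)

if __name__ == "__main__":
    print(bytes_scanned(orders) / GIB)                                 # 1000.0 (SELECT *)
    print(bytes_scanned(orders, ["order_id", "created_at"]) / GIB)     # 150.0 (two columns)
    print(bytes_scanned(orders, ["order_id", "created_at"], 1) / GIB)  # 5.0 (two columns, one day)
```

In this model, selecting two columns cuts the scan by 85%, and filtering to one day's partition cuts it by a further factor of 30.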

The Optimization Journey

To address these issues, we implemented a three-pronged approach that significantly reduced our client's BigQuery monthly cost:

- Updates and Batch Processing: We replaced individual updates with a caching mechanism and implemented batch updates at the end of each process. This dramatically reduced the number of updates and their associated costs. It also minimized how often data needed to be refreshed in BigQuery, potentially reducing how often expensive SQL queries had to run.

- Selective Field Usage: We meticulously analyzed the client's SQL queries so that they retrieve only the columns essential to each analysis. This significantly reduced the amount of data scanned by each query, leading to substantial cost savings, because the client was no longer paying to process columns it didn't use.

- Harnessing Partitioned Tables: We converted the client's tables to partitioned tables based on logical divisions like date or product category. When a query filters on the partitioning column, BigQuery scans only the relevant partitions, minimizing the data scanned and further reducing overall costs.
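The caching and batch-update approach from the first item can be sketched as follows. This is a minimal illustration, not the code used for the client: `UpdateBuffer`, the `write_batch` callback and `merge_sql` are hypothetical names, and the step that loads buffered rows into a staging table is left out. Repeated changes to the same row collapse in memory, so BigQuery receives one MERGE per process run instead of one UPDATE per row.

```python
from typing import Any, Callable, Dict, List

Row = Dict[str, Any]


class UpdateBuffer:
    """Collect per-row changes in memory and write them in one batch.

    Hypothetical sketch: `write_batch` stands in for loading rows into a
    staging table and running a single MERGE (not shown).
    """

    def __init__(self, key: str, write_batch: Callable[[List[Row]], None]) -> None:
        self.key = key
        self.write_batch = write_batch
        self._pending: Dict[Any, Row] = {}

    def update(self, row: Row) -> None:
        # Merge field changes for the same key; later values win.
        self._pending.setdefault(row[self.key], {}).update(row)

    def flush(self) -> int:
        """Send all pending rows at once and return how many were written."""
        rows = list(self._pending.values())
        if rows:
            self.write_batch(rows)
        self._pending.clear()
        return len(rows)


def merge_sql(target: str, staging: str, key: str, columns: List[str]) -> str:
    """Build one BigQuery MERGE statement that applies every staged change."""
    assignments = ", ".join(f"{c} = S.{c}" for c in columns)
    return (
        f"MERGE `{target}` T USING `{staging}` S ON T.{key} = S.{key} "
        f"WHEN MATCHED THEN UPDATE SET {assignments}"
    )


if __name__ == "__main__":
    batches: List[List[Row]] = []
    buf = UpdateBuffer("order_id", batches.append)
    buf.update({"order_id": 1, "status": "paid"})
    buf.update({"order_id": 2, "status": "paid"})
    buf.update({"order_id": 1, "status": "shipped"})
    print(buf.flush())  # 2 rows, written in a single batch
    print(merge_sql("shop.orders", "shop.orders_staging", "order_id", ["status"]))
```

Each BigQuery DML statement is billed for the data it processes, so one MERGE per run is generally far cheaper than many single-row UPDATEs against the same table.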

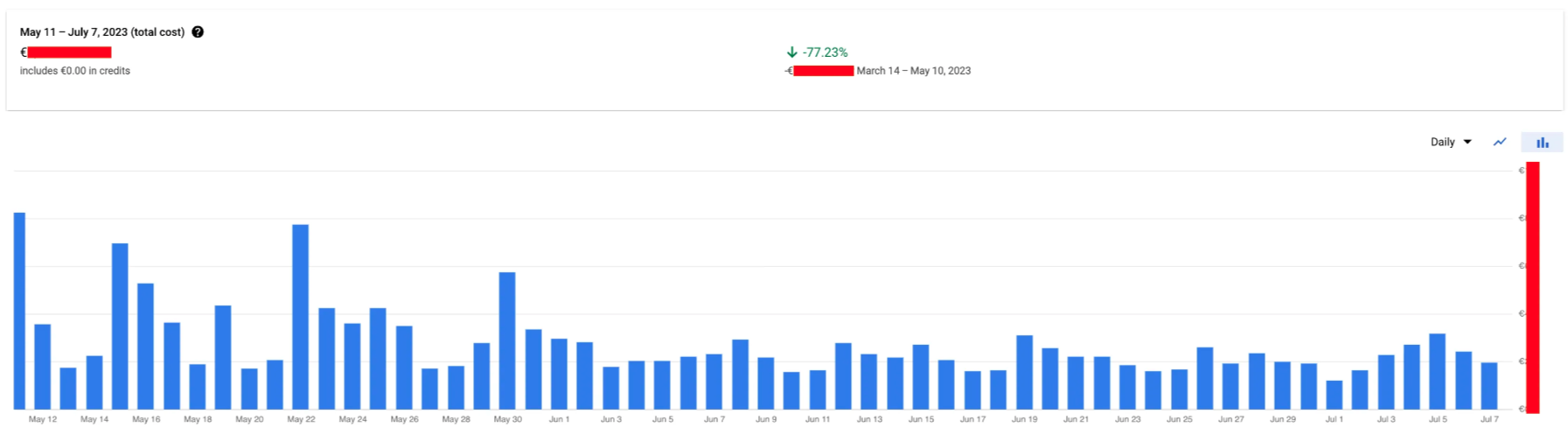

Monthly comparison of cost reduction

The Result:

The optimizations that we implemented for our client yielded remarkable results. Within 20 days, they experienced a 38% decrease in their BigQuery expenses. After one month, a side-by-side comparison with the previous month revealed a staggering 77% cost saving.

By identifying and fixing inefficiencies in how the client stored and queried data, we cut costs substantially and improved the performance of their existing processes. Overall, it was a clear success in optimizing BigQuery pricing.

Ready to optimize your BigQuery costs?

Implement these strategies or consider seeking professional help for a tailored approach. Crystalloids offers expert services to help you minimize both per-query and data storage costs, maximizing your return on data analysis investment. This ensures you leverage the full potential of BigQuery's cost-saving features.
